Ollama: The Open-Source Solution for Building A ChatGPT Alternative

ChatGPT at home

ChatGPT and Bard (now Gemini) have revolutionized AI interactions, but what if you could create your own AI language model? This tutorial will guide you through building your very own AI assistant, similar to ChatGPT, using the open-source Ollama framework.

Ollama.ai: Your Open-Source AI Solution

Ollama.ai is an open-source framework that allows you to run large language models (LLMs) locally on your computer. It provides a simple command-line interface and a local API for interacting with LLMs, making it easy to generate text, translate languages, and write various creative content. Ollama supports a variety of pre-trained LLMs, including Llama 2, CodeLlama, and Orca-Mini, and it also lets you build customized variants of these models (for example, with your own system prompt and parameters) rather than training new ones from scratch.

Here are some of the key features of Ollama:

- Ease of Use: Ollama’s simple API makes it straightforward to load, run, and interact with LLMs. You can quickly get started with basic tasks without extensive coding knowledge.

- Flexibility: Ollama offers a versatile platform for exploring various applications of LLMs. You can use it for text generation, language translation, creative writing, and more.

- Powerful LLMs: Ollama gives you access to pre-trained LLMs like Llama 2, known for its size and capabilities. It also supports customizing these models to fit your specific needs.

- Local Execution: Ollama enables you to run LLMs locally on your device, enhancing privacy and control over your data. You don’t rely on cloud-based services and avoid potential latency issues.

- Community Support: Ollama actively participates in the LLM community, providing documentation, tutorials, and open-source code to facilitate collaboration and knowledge sharing.
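To make the customization point above concrete, here is a minimal sketch of how a customized model is usually described in Ollama: a small text file called a Modelfile, which names a base model and overrides its parameters and system prompt. The helper name `write_modelfile`, the model name `shell-helper`, and the system prompt are my own hypothetical examples, and the sketch assumes the `codellama` base model is available locally.

```bash
#!/bin/sh
# Sketch: derive a customized model from a base model using a Modelfile.
# "write_modelfile" and "shell-helper" are hypothetical names for illustration.

# Write a minimal Modelfile to the path given as $1.
write_modelfile() {
    cat > "$1" <<'EOF'
FROM codellama
PARAMETER temperature 0.2
SYSTEM """
You are a concise assistant that writes shell scripts.
"""
EOF
}

# Usage (requires Ollama and the codellama base model):
#   write_modelfile ./Modelfile
#   ollama create shell-helper -f ./Modelfile
#   ollama run shell-helper
```

This does not train anything; it only layers settings on top of an existing model, which is why it runs quickly even on a CPU-only machine.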

Overall, Ollama.ai stands as a valuable tool for researchers, developers, and anyone interested in exploring the potential of large language models without the complexities of cloud-based platforms. Its ease of use, flexibility, and powerful LLMs make it accessible to a wide range of users.

How to Install and Use Ollama.ai

Prerequisites

According to the official Ollama.ai documentation, the recommended system requirements for running Ollama are:

| Component | Recommendation |
| --- | --- |
| Operating system | Linux: Ubuntu 18.04 or later; macOS: macOS 11 Big Sur or later |
| RAM | 8 GB for running 3B models; 16 GB for running 7B models; 32 GB for running 13B models |
| Disk space | 12 GB for installing Ollama and the base models, plus additional space for model data, depending on the models you use |
| CPU | Any modern CPU with at least 4 cores; at least 8 cores recommended for running 13B models |
| GPU (optional) | Not required, but it can improve performance, especially with larger models. If you have a supported GPU, Ollama can use it to speed up inference. |

In addition to the above, Ollama also requires a working internet connection to download the base models and install updates.
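The RAM guidance above can be turned into a quick check. The following is only a sketch: the function names `largest_model_for_ram` and `total_ram_gb` are hypothetical, the thresholds are copied from the requirements listed above, and the RAM figure on Linux is rounded to the nearest GB because the kernel reports slightly less than the installed amount.

```bash
#!/bin/sh
# Sketch: map installed RAM (in whole GB) to the largest model size
# suggested by the requirements above. Function names are hypothetical.

largest_model_for_ram() {
    if [ "$1" -ge 32 ]; then echo "13B"
    elif [ "$1" -ge 16 ]; then echo "7B"
    elif [ "$1" -ge 8 ]; then echo "3B"
    else echo "none"
    fi
}

# Report total RAM in GB on Linux (/proc/meminfo) or macOS (sysctl).
total_ram_gb() {
    case "$(uname -s)" in
        Linux)  awk '/^MemTotal:/ { printf "%d\n", ($2 / 1024 / 1024) + 0.5 }' /proc/meminfo ;;
        Darwin) echo $(( $(sysctl -n hw.memsize) / 1024 / 1024 / 1024 )) ;;
        *)      echo 0 ;;
    esac
}

# Usage:
#   largest_model_for_ram "$(total_ram_gb)"
```

Treat the result as a starting point rather than a guarantee; other running programs also compete for memory.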

Step 1 – Install Ollama.ai

(Note: As of December 2023, Ollama.ai officially supports only macOS and Linux.)
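Before running the installer, it can help to confirm that the machine is on a supported platform and that curl is present. This is a small sketch under those assumptions; `is_supported_os` and `have_cmd` are hypothetical helper names, not part of Ollama.

```bash
#!/bin/sh
# Sketch: pre-install checks. Helper names are hypothetical.

# Succeeds if the kernel name (as printed by `uname -s`) is Linux or macOS (Darwin).
is_supported_os() {
    case "$1" in
        Linux|Darwin) return 0 ;;
        *)            return 1 ;;
    esac
}

# Succeeds if the named command is available on PATH.
have_cmd() {
    command -v "$1" >/dev/null 2>&1
}

# Usage:
#   is_supported_os "$(uname -s)" || echo "Unsupported OS: $(uname -s)"
#   have_cmd curl || echo "curl is missing; install it first"
```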

To install Ollama.ai, run the following commands:

- Check that you have curl installed:

apt install curl -y

or

yum install curl -y

or

dnf install curl -y

- Run the installation script:

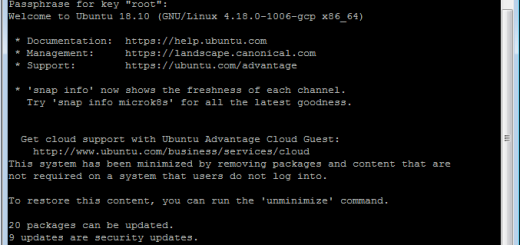

curl https://ollama.ai/install.sh | sh

You should see the following output:

curl https://ollama.ai/install.sh | sh

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 7494 0 7494 0 0 27786 0 --:--:-- --:--:-- --:--:-- 27858

Downloading ollama…

################################################################## 100.0%

Installing ollama to /usr/local/bin…

[sudo] password for rbailey:

Creating ollama systemd service…

Enabling and starting ollama service…

WARNING: No NVIDIA GPU detected. Ollama will run in CPU-only mode.

Step 2 – Configure an AI model

Browse this website to view the available AI models that work with Ollama – https://ollama.ai/library

I am going to use Codellama – see here for more info.

ollama run codellama

Note: If you hit an error, try pulling the Codellama model before running it:

ollama pull codellama:latest

Step 3 – Query the AI model

Now you can start conversing with your AI! Try asking it to write code, answer questions, or even generate creative content.
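The interactive prompt is not the only way in. As a rough sketch, the Ollama service also listens on a local HTTP API (by default at http://localhost:11434), so a prompt can be sent from a script. The helper names `json_escape`, `build_generate_payload`, and `ask_ollama`, and the `API_BASE` variable, are my own for illustration; the sketch assumes the service is running and the model has already been pulled.

```bash
#!/bin/sh
# Sketch: query a local Ollama server over its REST API.
# API_BASE and the function names below are hypothetical helpers.

API_BASE="${API_BASE:-http://localhost:11434}"

# Escape backslashes and double quotes so text can sit inside a JSON string.
# Note: this simple helper does not handle newlines or control characters.
json_escape() {
    printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# Build the JSON body for POST /api/generate from a model name and a prompt.
build_generate_payload() {
    printf '{"model": "%s", "prompt": "%s", "stream": false}' \
        "$(json_escape "$1")" "$(json_escape "$2")"
}

# Send the prompt and print the raw JSON reply (the text is in its "response" field).
ask_ollama() {
    curl -s "$API_BASE/api/generate" -d "$(build_generate_payload "$1" "$2")"
}

# Usage (requires a running Ollama service):
#   ask_ollama codellama "Write a bash one-liner that counts lines in a file"
```

For a quick one-off question, passing the prompt directly on the command line, as in `ollama run codellama "your prompt"`, should also work without entering the interactive session.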

Here is an example of Codellama creating a bash script to interact with the Okta API:

>>> Write me a bash script that will create a new user via the Okta API

To create a new user via the Okta API, you can use the `curl` command to make an HTTP request to the `/api/v1/users` endpoint.

---

```bash
#!/bin/bash

# Set the variables for the API request
API_URL="https://your-okta-domain.com"
API_TOKEN="your_api_token"
USERNAME="new_user_name"
PASSWORD="strong_password"
EMAIL="[email protected]"

# Create the user via the API
curl -X POST \
  "${API_URL}/api/v1/users" \
  -H "Content-Type: application/json" \
  -H "Authorization: SSWS ${API_TOKEN}" \
  -d "{
    \"profile\": {
      \"firstName\": \"${USERNAME}\",
      \"email\": \"${EMAIL}\"
    },
    \"credentials\": {
      \"password\": {
        \"value\": \"${PASSWORD}\"
      }
    }
  }"
```

This script assumes that you have already set up an API token for your Okta account. If you haven't, you can do so by following the instructions in the Okta documentation.

Once you have the API token set up, you can use this script to create a new user via the Okta API. Simply replace the `your_api_token` placeholder with the actual value of your API token, and run the script as is. The script will create a new user with the specified username, password, and email address.

Note that this script uses the `curl` command to make an HTTP request to the `/api/v1/users` endpoint. If you don't have `curl` installed on your system, you can install it by running the following command:

```bash
sudo apt-get install curl
```

Ollama Q&A

Q: What sets Ollama.ai apart from other AI frameworks like ChatGPT and Bard?

A: Ollama.ai distinguishes itself by being an open-source framework that allows users to run large language models (LLMs) locally. This provides a unique advantage in terms of privacy, control over data, and the ability to operate without reliance on cloud-based services.

Q: How user-friendly is Ollama.ai for those without extensive coding knowledge?

A: Ollama.ai prides itself on its simplicity, featuring a straightforward API that makes loading, running, and interacting with LLMs accessible even for users with limited coding expertise. This ease of use facilitates quick engagement in basic tasks without a steep learning curve.

Q: What are the standout features of Ollama.ai that make it a valuable tool for users?

A: Ollama.ai offers a versatile platform with powerful pre-trained LLMs like Llama 2, CodeLlama, and Orca-Mini. Its flexibility allows users to explore applications such as text generation, language translation, and creative writing. Additionally, the support for customizing models caters to specific user needs.

Q: How does Ollama.ai contribute to user privacy and control over data?

A: Ollama.ai allows users to run LLMs locally on their devices, enhancing privacy by avoiding reliance on cloud-based platforms. This not only provides users with increased control over their data but also eliminates potential latency issues associated with cloud services.

Q: Can you share the recommended system requirements for installing Ollama.ai?

A: According to the official Ollama.ai documentation, the recommended system requirements include operating systems such as Ubuntu 18.04 or later for Linux and macOS 11 Big Sur or later for macOS. The requirements also specify RAM, disk space, CPU, and, optionally, GPU specifications based on the desired model size.

Q: What steps are involved in installing Ollama.ai on a system?

A: To install Ollama.ai, users need to follow a straightforward process. They should ensure the fulfillment of prerequisites, including system requirements, and then run specific commands for installation. The process involves checking for the presence of curl, running the installation script, and configuring the AI model based on user preferences.

Q: Which AI models are compatible with Ollama.ai, and where can users find them?

A: Ollama.ai supports various AI models, including Codellama. Users can explore the available models on the official Ollama.ai library website (https://ollama.ai/library) to find detailed information about each model and choose the one that best suits their needs.

Q: How does Ollama.ai facilitate user queries and interactions with AI models?

A: Ollama.ai provides a user-friendly interface for querying AI models. Users can interact with the models by using simple commands, as demonstrated in the article with the example of Codellama creating a bash script to interact with the Okta API.
